Last Updated on December 20, 2025

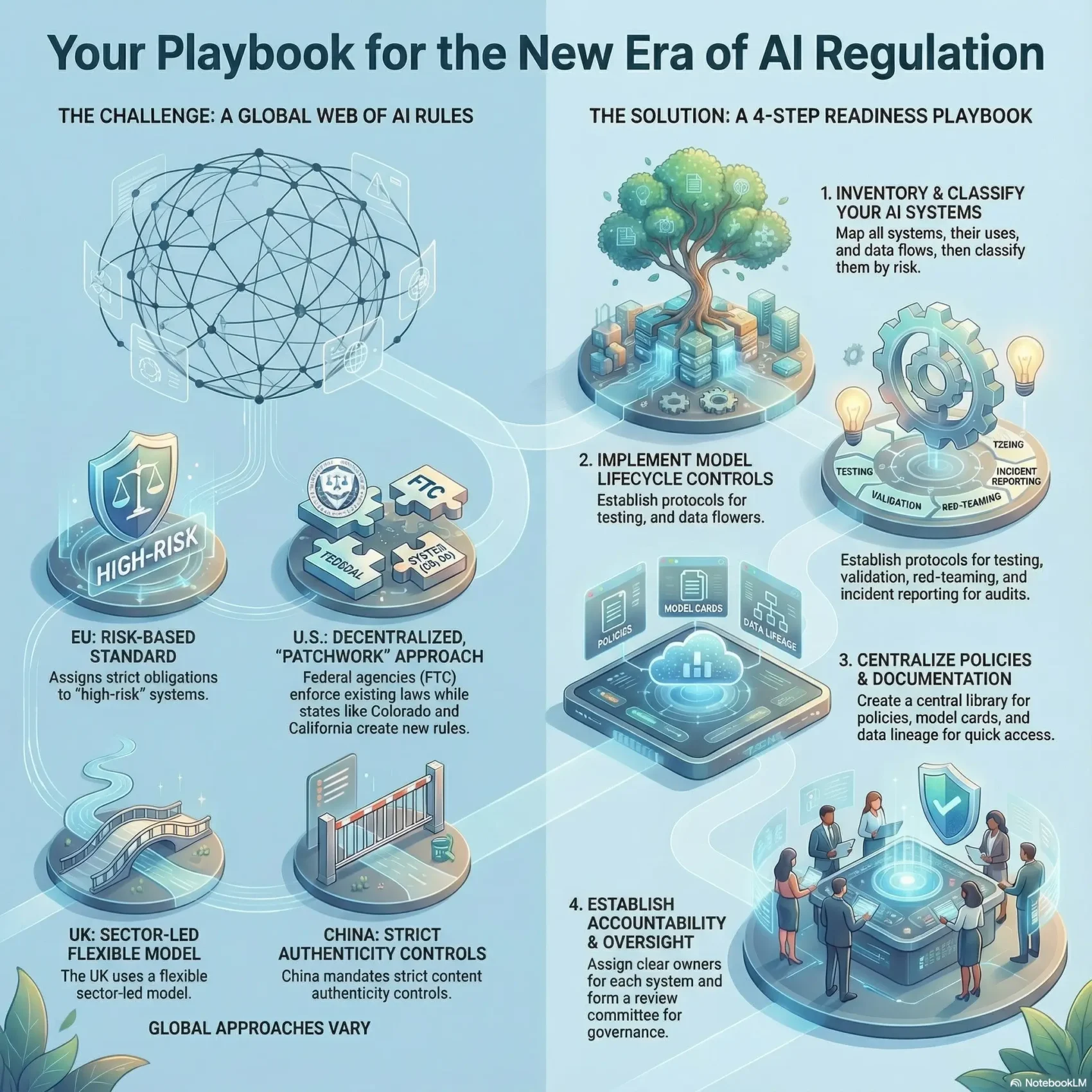

You need a clear playbook to navigate shifting rules across the U.S., EU, and states like Colorado and California. This short guide helps you turn complex policy and emerging regulations into practical steps for your team.

Start by mapping your highest-risk systems and the documentation they require. Expect tighter expectations around data, governance, and testing as federal signals, state mandates, and the EU AI Act converge.

We’ll show how to align development and vendor practices with those expectations. You’ll also learn how to build a cross-border strategy that protects market access and fosters innovation.

Along the way, find concrete checkpoints for procurement, audits, and transparency. If you want a deeper decision framework, see our practical guide on AI decision making to help prioritize actions now.

Key Takeaways

- Map your high-risk systems and documentation needs.

- Update data governance and testing controls to meet audits.

- Build a cross-jurisdiction strategy that scales.

- Use new rules as product and trust differentiators.

- Prepare procurement and funding plans to protect market access.

Why AI regulation matters now in the United States

Momentum in Washington and at the state level makes this a moment to act, not wait. Federal agencies are already applying existing law to model-driven tools, and states have moved fast with targeted statutes and rules.

What that means for you:

- Your use of artificial intelligence is subject to enforcement by agencies such as the FTC and FCC.

- State policy—Colorado’s high-risk rules and California’s transparency steps—creates multi-jurisdiction obligations.

- Congressional proposals may add focused requirements on political ads, likeness rights, and disclosures.

Don’t treat guidance as a future problem. Start with a short inventory: map systems that handle regulated data, flag high-risk uses, and note where decisions affect consumers.

- Inventory systems and data flows.

- Classify uses by risk and industry impact.

- Implement testing, logging, and documentation ahead of audits.

Quick guidance: aim for scalable controls, not one-off fixes. When in doubt, log actions, test performance, and be prepared to explain how a system uses information and manages risk.

The state of play: a global trend analysis you can act on

Global policy is shifting fast: what starts as a national strategy often becomes enforceable law within a few years.

From principles to hard law: how jurisdictions move from strategy to statutes

Many governments follow a clear arc. They publish national strategies and voluntary principles, try pilots, then turn successful pilots into binding rules.

Examples at different points on that arc include the EU’s risk-tiered statute, the UK’s sector-led approach, and soft-law frameworks from Singapore and Japan.

Innovation versus risk: the balancing act shaping policy worldwide

As artificial intelligence capabilities scale, governance demands grow with each research and development cycle.

- Expect transparency, testing, and oversight to become baseline asks.

- Frameworks that mirror your internal processes cut time-to-comply.

- Compare jurisdictions to spot harmonization opportunities that save effort.

“Monitor principles today to predict tomorrow’s controls.”

Quick action: map where your systems touch regulated markets and document the design-time steps you took. Use global policy trackers to standardize controls ahead of regulatory changes.

U.S. federal outlook: decentralization, guidance, and shifting priorities

Expect a decentralized playbook: agencies will use existing powers while Congress debates new frameworks. In practice, federal policy pairs a pro-innovation agenda with agency enforcement rather than relying on a single sweeping law.

Actions and signals

America’s AI Action Plan set a broad agenda that ties funding and export strategy to national goals. OMB guidance may weigh state AI rules in funding decisions, and procurement neutrality rules may change how your technology competes for government work.

Authorities already in motion

Regulators are active now. The FCC has ruled that the TCPA’s restrictions on artificial voices cover AI-generated voice calls, and the FTC continues to act against deceptive AI claims and biased outcomes. Voluntary safety commitments from major vendors preview expectations on security testing and incident reporting.

What may come next

Congress is debating a range of bills, from regulatory sandbox pilots to political-ad disclosures and licensing proposals. Track these developments closely: they could shift documentation, testing, and disclosure demands on your products.

- Strategy: map procurement and documentation needs early.

- Security: align testing and red-teaming with voluntary baselines.

- Information: keep a live register of federal guidance to inform your roadmap.

U.S. state momentum: Colorado’s template and California’s transparency turn

State laws are moving quickly, forcing product teams to bake compliance into design now. Colorado and California set two different approaches that you must plan for across jurisdictions.

Colorado: high-risk systems and bias controls

The Colorado AI Act targets developers and deployers of high-risk automated decision systems in areas like education, healthcare, housing, employment, insurance, and legal services.

Map where your systems make consequential decisions and document how you test for discrimination.

California: transparency, deepfakes, and likeness rights

California’s package focuses on disclosure and consumer protections. Bills cover election deepfakes, deceased-person likeness rights, dataset summaries for model training, and health disclosure rules.

If you serve California users, operationalize labeling and training-data summaries before 2026 to avoid steep penalties.

Copycat bills and divergence risks

Other states are drafting similar measures, but definitions and enforcement will vary. That creates operational risk if you apply a single approach nationwide.

- Inventory: list systems by areas affected and consumer impact.

- Document: capture testing, vendor proofs, and bias mitigation steps.

- Matrix: build a state-by-state compliance map to align disclosures and rights management.

EU AI Act: risk tiers, obligations, and the European AI Office

If you serve EU customers, you need to map which of your offerings fall into each risk tier now.

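The Act sets four risk levels (unacceptable, high, limited, and minimal) and ties specific obligations to each tier. Unacceptable-risk practices are prohibited. Limited-risk tools face light transparency duties, and minimal-risk tools face no new mandatory requirements. High-risk systems require robust data governance, testing, and conformity assessments.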

Provider vs deployer duties and documentation demands

Providers must produce technical documentation, run tests, and complete conformity assessments for high-risk systems. Deployers must use systems according to the provider’s instructions, assign human oversight, keep logs of real-world use, and support post-market monitoring.

- Transparency: user notices are required for chatbots, deepfakes, and certain other AI features.

- Data: traceable lineage and model cards speed audits and support compliance.

- Proof: regulators will ask for risk management files, test logs, and mitigation steps.

Extraterritorial impact on U.S. businesses offering services in the EU

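The Act applies to providers that place AI systems on the EU market. It also covers providers and deployers outside the EU whose system outputs are used in the EU. That means your U.S. offerings may need EU-grade controls even if they are hosted outside Europe.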

Actionable steps: map provider vs deployer roles in contracts, build a unified evidence library, and set up post-market monitoring with EU partners.

For practical examples of monitoring and oversight that affect workforce tools, see our piece on employee monitoring.

United Kingdom’s sector-led approach: flexible principles in practice

The UK favors a principles-first approach where sector regulators apply safety, transparency, and fairness to technology use.

This model leans on existing industry rules to keep oversight practical and aligned with real-world work.

What this means for you:

- If you already meet sector standards, compliance can be smoother—expect guidance that complements current obligations.

- Frameworks and sandboxes offer low-friction ways to pilot features in regulated industries.

- Track outputs from known watchdogs (competition, data protection, financial services) because coordination across regulators is common.

Innovation remains a clear policy goal. Align your governance and documentation to the UK principles to show readiness.

“Use regulator familiarity to speed safe deployment, but substantiate claims and test for foreseeable harms.”

Canada’s trajectory: from AIDA stall to AI Safety Institute and codes

Canada’s approach emphasizes research capacity and voluntary standards while its proposed AI law remains stalled.

What this means for you: the Artificial Intelligence and Data Act (AIDA) did not advance, but the government launched the Canadian AI Safety Institute in November 2024 to fund research and safety projects. Expect codes of practice and sector guidance to shape market expectations before any new legislation returns.

If you sell to the federal government, plan for the Directive on Automated Decision-Making. It requires an Algorithmic Impact Assessment that assigns each automated decision system an impact level. That directive sets buyer expectations for testing, documentation, and evidence.

- Treat voluntary codes as de facto standards for privacy, transparency, and safety.

- Keep impact assessments, test logs, and incident workflows ready for procurement and audits.

- Align data governance to Canadian norms and harmonize with EU/UK baselines where possible.

Strategy tip: monitor institute research and competition authority guidance. These signals preview future compliance demands and help you adapt your product and procurement strategy.

Asia-Pacific contrasts: soft-law leaders and hard-line oversight

Asia-Pacific policy mixes light-touch frameworks with strict controls, so your regional playbook should be flexible.

Singapore leads with model frameworks. Its Model AI Governance Framework (2019) and a 2024 generative update give clear guidance for product teams. Use these templates for documentation, testing, and transparency when you offer services in the region.

Japan: human-centered principles

Japan promotes human-centered principles that stress dignity and social benefit. These soft rules shape sector guidance today and may become enforceable for specific harms.

Frame your features around user dignity and explainability to align with this approach.

Australia: practical safety tools

Australia released a voluntary safety standard and an AI Impact Navigator in 2024. These tools help you evaluate risk and improve development practices.

Expect proposed guardrails for high-risk uses and privacy reforms that boost transparency on automated decisions.

China: centralized oversight and labeling

China’s Interim Measures for generative AI services, deep synthesis rules, and algorithmic recommendation provisions target content authenticity and recommendation controls. Labeling rules for AI-generated content took effect on September 1, 2025.

If your services reach China, add content-authenticity checks and clear disclosures to meet fast-changing policy demands.

- Mix frameworks and enforceable rules in your compliance matrix.

- Embed transparency and guidance into product flows now.

- Track generative technology updates to stay ahead of policy shifts in this part of the world.

Middle East initiatives: strategy-led acceleration in Saudi Arabia and the UAE

Saudi Arabia and the UAE are turning national plans into funded pilots and public programs. Their national strategy work pushes talent development, sector deployments, and ethical governance as core goals.

What this means for you:

- Expect strategy-first playbooks that translate quickly into initiatives and procurement opportunities.

- Align your governance and information requirements to government expectations to qualify for partnerships.

- Use local accelerators and sandboxes to showcase development-ready solutions.

Government sponsorship in the Gulf rewards ready-to-deploy technology with clear documentation and proof-of-concept results. Match your innovation narrative to societal benefit and ethical design to increase traction.

“Move fast to qualify for public programs; clear safeguards and localization win trust.”

Monitor proof-of-concept timelines and build relationships with institutions that set policy and funding priorities. A presence in these markets can also serve as a testbed for solutions you later take to other regions.

Latin America’s rise: Brazil’s comprehensive bill and Chile’s rights-forward draft

Latin America is moving from pilot projects to concrete laws that affect how you build and sell products. Brazil’s Bill 2338/2023 advanced in the Senate and targets excessive-risk systems, creates civil liability, sets incident reporting duties, and proposes a national body to oversee enforcement.

Chile’s May 2024 draft takes a rights-first tack. It aims to promote innovation while centering human rights, and it includes self-regulation elements within Chile’s broader digital-law agenda.

What you should do now:

- Map the jurisdictions where your services operate and flag high-impact uses.

- Build governance and research safeguards to enable safe development and testing.

- Create transparency tools—model summaries, user notices, and localized education.

“Engage local regulators early to clarify reporting, acceptable testing, and documentation.”

Also plan for IP and provenance questions that may appear in companion laws. Track timelines and grace periods so you can prioritize compliance work and localization before enforcement begins.

Global governance anchors: OECD, G7/Hiroshima, UN, Council of Europe

Leading multilateral forums are defining guardrails that steer national strategies and industry behavior. These anchors give you a shared language for board reporting and product design. Use them to shape internal policy and budget requests.

Key international outputs, including the OECD Recommendation on Artificial Intelligence and the G7 Hiroshima principles, set shared principles and tie oversight to human rights and international law. A March 2024 UN General Assembly resolution encourages states to develop national governance approaches. The Council of Europe adopted its Framework Convention on Artificial Intelligence in May 2024. The Convention covers accountability and risk assessment.

- Convergence: expect focus on transparency, accountability, and safety across jurisdictions.

- Practical use: translate principles into checklists for design reviews and post-deployment monitoring.

- Resources: leverage these initiatives to justify funding for governance, testing, and protection measures.

These global anchors help your compliance strategy carry across borders. Participate in consultations and turn high-level principles into measurable checkpoints so you can evidence progress to regulators and customers.

“Use recognized frameworks to reduce friction and show clear oversight.”

Cross-cutting obligations to watch: transparency, data, IP, and human rights

Your playbook should make transparency, data handling, and rights protections reusable across products. Treat these duties as shared modules you can apply across teams and releases.

Training data disclosures, watermarking, and content authenticity

Plan for dataset summaries now. California AB 2013 expects high-level training data notes: sources, IP status, personal information, and licensing. Watermarking and provenance belong in publishing pipelines as a basic authenticity layer.

Privacy-by-design and anti-discrimination controls

For high-stakes systems, embed privacy-by-design and bias testing by default. Require human oversight, appeal paths, and clear information trails for model versions, tests, incidents, and mitigation steps.

Intellectual property and likeness rights in the generative era

Secure permissions, log usage, and align your intellectual property policies with emerging likeness rights laws. Protect sensitive data with tighter access, retention, and minimization rules.

“Treat cross-cutting obligations as reusable components, not one-off fixes.”

- Do this: document data sources and licensing in a reusable format.

- Do this: add watermarking and provenance to content pipelines.

- Do this: standardize disclosures and safety notes for consistent transparency.
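One way to keep dataset notes in a reusable format is a small structured record that renders to JSON. The sketch below assumes Python. The `DatasetSummary` fields and the `publish_summary` helper are hypothetical names. They loosely mirror the items mentioned above (sources, IP status, personal information, licensing) and are not the statutory wording of AB 2013.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class DatasetSummary:
    # Illustrative fields only; not the statutory text of AB 2013.
    name: str
    source: str
    ip_status: str              # e.g. "company-owned", "licensed", "public domain"
    contains_personal_info: bool
    license: str


def publish_summary(model_name: str, datasets: list) -> str:
    """Render one model's training-data summary as JSON for reuse in disclosures."""
    return json.dumps(
        {"model": model_name, "datasets": [asdict(d) for d in datasets]},
        indent=2,
    )


summary = publish_summary("support-assistant-v3", [
    DatasetSummary("support-tickets-2024", "internal CRM export",
                   "company-owned", True, "internal use only"),
    DatasetSummary("product-docs", "public documentation site",
                   "company-owned", False, "CC BY 4.0"),
])
print(summary)
```

Because the record is structured, the same source of truth can feed a public web page, a customer questionnaire, and an audit file without being retyped.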

AI regulation readiness: your compliance and governance playbook

Begin with a clear map of systems and turn that map into repeatable controls your teams can use every day. This keeps work moving while you meet obligations across the U.S., EU, and states like Colorado and California.

Inventory and classify

Start an inventory that lists each system, its intended use, data inputs and outputs, and which decisions it affects. Classify each item by jurisdiction and by risk tier so you know where to prioritize effort.

- List systems and tied decisions.

- Tag each with applicable rules and a risk score.

- Use a simple matrix to show gaps at a glance.
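Here is a minimal sketch of such an inventory, assuming Python. The rule tags, the `AISystem` record, and the scoring weights are hypothetical placeholders, not legal classifications. The sketch shows that classification drives which obligations apply and which gaps appear in the matrix. Here the deciding attribute is whether a system makes consequential decisions.

```python
from dataclasses import dataclass, field

# Hypothetical rule tags: (jurisdiction, rule, applies only to consequential uses?)
RULES = [
    ("EU", "eu_ai_act_high_risk", True),
    ("CO", "colorado_ai_act", True),
    ("CA", "ca_ab_2013_training_data", False),
    ("CA", "ca_sb_942_disclosure", False),
]


@dataclass
class AISystem:
    name: str
    intended_use: str
    jurisdictions: set
    consequential_decision: bool  # e.g. affects hiring, housing, credit
    uses_personal_data: bool
    controls_done: set = field(default_factory=set)

    def risk_score(self) -> int:
        # Illustrative weights only: 0 (low) to 3 (high).
        return 2 * self.consequential_decision + self.uses_personal_data

    def applicable_rules(self) -> set:
        return {
            rule
            for jurisdiction, rule, consequential_only in RULES
            if jurisdiction in self.jurisdictions
            and (self.consequential_decision or not consequential_only)
        }

    def gaps(self) -> set:
        return self.applicable_rules() - self.controls_done


def gap_matrix(systems):
    """Rows of (name, risk score, sorted missing rules), highest risk first."""
    ranked = sorted(systems, key=lambda s: s.risk_score(), reverse=True)
    return [(s.name, s.risk_score(), sorted(s.gaps())) for s in ranked]


inventory = [
    AISystem("support-chatbot", "answer product questions", {"CA"}, False, True),
    AISystem("resume-screener", "rank job applicants", {"CO", "EU"}, True, True,
             {"colorado_ai_act"}),
]
for name, score, missing in gap_matrix(inventory):
    print(f"{name:16} risk={score} gaps={missing}")
```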

Model lifecycle controls

Build testing protocols, run red-teaming exercises, and keep an incident playbook ready. Make security reviews part of every update and log test results for audits.

- Testing and validation steps.

- Red-team checks for safety and security.

- Incident reporting and post-mortems.
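These controls can be enforced as a release gate that blocks a model version until its evidence is logged. The sketch below is a hypothetical Python example. The evidence names in `REQUIRED_EVIDENCE` and the `Release` fields are assumptions; adapt them to your own testing and incident process.

```python
from dataclasses import dataclass, field

# Hypothetical evidence every release must link to before shipping.
REQUIRED_EVIDENCE = ("validation_tests", "red_team_review", "security_review")


@dataclass
class Release:
    model: str
    version: str
    evidence: dict = field(default_factory=dict)  # evidence name -> link to logged result
    open_critical_incidents: int = 0


def release_gate(release: Release) -> list:
    """Return blocking reasons; an empty list means the release may proceed."""
    reasons = [
        f"missing evidence: {name}"
        for name in REQUIRED_EVIDENCE
        if not release.evidence.get(name)
    ]
    if release.open_critical_incidents:
        reasons.append(f"{release.open_critical_incidents} open critical incident(s)")
    return reasons


candidate = Release(
    "credit-scorer", "2.3.0",
    {"validation_tests": "runs/412", "red_team_review": "redteam/2025-11"},
    open_critical_incidents=1,
)
for reason in release_gate(candidate):
    print("BLOCKED:", reason)
```

The gate returns reasons rather than a bare boolean. That gives auditors and the review committee a record of why a release was held.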

Policy stack and central information

Publish a compact policy library: acceptable use, vendor standards, and employee guidance. Centralize model cards, data lineage, and change logs so teams can find information fast.

Accountability and oversight

Assign clear owners, form a review committee, and keep audit trails for approvals. Designate escalation paths so incidents move quickly to the right team.
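For approval audit trails, one common technique is a hash-chained log. Each entry stores a SHA-256 hash of the previous entry, so later edits become detectable. The Python sketch below is illustrative only, and the `AuditTrail` class and its fields are hypothetical. In production you would also persist the log and store the latest hash somewhere the log's editors cannot change it.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditTrail:
    """Append-only approval log; each entry stores the previous entry's hash."""

    def __init__(self):
        self.entries = []

    def record(self, system, action, approver, timestamp=None):
        body = {
            "system": system,
            "action": action,
            "approver": approver,
            "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record("resume-screener", "approve v1.2 for production", "review-committee")
trail.record("resume-screener", "escalate incident to security", "on-call-lead")
print(trail.verify())                            # True
trail.entries[0]["approver"] = "someone-else"    # simulate an after-the-fact edit
print(trail.verify())                            # False
```

A hash chain makes tampering detectable, not impossible. Someone who can rewrite every entry can rebuild the whole chain, which is why the latest hash should be stored separately.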

“Treat governance as an operational tool that reduces risk and speeds delivery.”

Operational timelines and heat map: what’s enforceable when

Start by mapping which markets will enforce new deadlines and how those dates affect your roadmap. Use that map to assign owners and set realistic milestones for product, legal, and security teams.

- Colorado: the Colorado AI Act is effective in 2026; follow upcoming rulemaking sessions now.

- California: SB 942 and AB 2013 take effect Jan 1, 2026; some measures (AB 2655, AB 1836, AB 3030) are already active.

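- EU: EU AI Act obligations phase in by date and risk tier. Prohibitions applied from February 2, 2025, and general-purpose AI model duties from August 2, 2025. Most high-risk obligations are scheduled for August 2, 2026, so sequence provider and deployer documentation accordingly.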

Medium-term watchlist

Switzerland is preparing an AI legislative proposal, and South Korea’s AI Basic Act is scheduled to take effect in January 2026. Track these items because new legislation often influences other jurisdictions.

Practical checklist:

- Build a heat map of jurisdictions by effective dates and enforcement intensity.

- Monitor regulators’ calendars and consultation windows to adjust early.

- Set internal transparency milestones (for example, model cards by quarter) and clarify which obligations apply per system and which apply per organization.
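A heat map can start as a simple score that combines time to the effective date with an enforcement-intensity rating. In this Python sketch, the dates and the 1 to 3 intensity scores are placeholders drawn loosely from the timelines above; confirm them before use. The bucketing thresholds in `heat` are arbitrary assumptions.

```python
from datetime import date

# Placeholder dates and 1-3 enforcement-intensity scores; confirm before use.
OBLIGATIONS = [
    ("EU", "AI Act high-risk duties", date(2026, 8, 2), 3),
    ("California", "SB 942 / AB 2013", date(2026, 1, 1), 2),
    ("Colorado", "Colorado AI Act", date(2026, 6, 30), 2),
]


def months_until(effective: date, today: date) -> int:
    return (effective.year - today.year) * 12 + (effective.month - today.month)


def heat(effective: date, intensity: int, today: date) -> str:
    """Combine urgency (time to effective date) with enforcement intensity."""
    months_left = months_until(effective, today)
    # Dates already passed count as most urgent (the rule is in force).
    urgency = 3 if months_left <= 3 else 2 if months_left <= 9 else 1
    score = urgency * intensity
    return "HIGH" if score >= 6 else "MEDIUM" if score >= 3 else "LOW"


today = date(2025, 12, 20)
for place, rule, effective, intensity in sorted(OBLIGATIONS, key=lambda o: o[2]):
    print(f"{place:11} {rule:24} {effective}  {heat(effective, intensity, today)}")
```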

“Keep a living dashboard for leadership showing progress, blockers, and emerging rules by jurisdiction.”

Supply chain, exports, and partnerships: geopolitical guardrails for AI

Export controls and partner screening are critical checkpoints in any resilient technology strategy. The United States now ties export policy to national security and to its alliances. That affects how your technologies, chips, models, and services move across the world.

- Map your technology stack against export lists and partner-country eligibility.

- Build third-party diligence to stop leakage of controlled technologies through services or contracts.

- Align security, contracting, and compliance reviews with government screening lists.

If you offer managed services, be explicit about where data and model artifacts live and who can access them. Use information-sharing agreements to document safeguards and audit rights.

“A compliant, resilient stack is a market advantage—buyers pay more for lower risk.”

- Plan regional redundancy for chips and tooling.

- Train business development on red flags and approval workflows.

- Include export and security checks in product release gates.

Sector snapshots: where regulation will bite first

Some application areas face faster scrutiny because they touch safety, money, or civic processes. Focus your work where harm and scale intersect.

Healthcare, finance, employment, elections, and consumer protection

Healthcare: label generated patient communications per CA AB 3030 and keep clinical decision support supervised by qualified staff. Log alerts and human review points so you can show safe practice.

Finance: test underwriting and credit tools for bias and fairness. Document decisions and offer an appeal path to support audits and consumer trust.

Employment: audit hiring and monitoring tools for discrimination and explainability. Keep versioned records of tests and mitigation steps.

Elections: expect strict controls on deepfakes (see CA AB 2655). Put content moderation, timing windows, and clear labeling in your playbook.

Consumer protection: the FTC is active on deceptive or discriminatory use. Substantiate product claims, disclose hidden automation, and track user interactions.

- Prioritize areas where sensitive data and high-impact decisions converge.

- Define acceptable use, human oversight points, and fallback paths for each industry application.

- Build sector-specific documentation packs for your services to speed customer approvals and audits.

“Maintain logs of decisions and user interactions to support audits and individual rights requests.”

Actionable tip: integrate human rights into high-impact contexts, especially where access to essential services is at stake. That protects users and reduces enforcement risk.

Strategy for the next 6-12 months: prioritize, pilot, and prove

Turn near-term policy signals into a clear action plan that your teams can execute fast. Start by defining a common control set that meets EU high-risk expectations and U.S. state transparency requirements, then localize controls for each jurisdiction you serve.

Set a U.S.-EU baseline, add state overlays, and pilot disclosures

Establish a single baseline: build controls that cover EU and federal needs, then add state overlays for Colorado and California.

- Pilot training data summaries and content labels to refine workflows before deadlines.

- Prove quick wins: publish model cards, run red-team checks, and test disclosure UX.

- Sequence rollouts by risk and customer impact, not by org structure.
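The baseline-plus-overlay idea maps naturally onto set union. Every system gets the baseline controls, and each jurisdiction it serves adds its overlay. The control identifiers in this Python sketch are hypothetical labels, not a complete reading of any statute. `rollout_order` simply sorts by risk and then by customer impact, as the last bullet suggests.

```python
# Hypothetical control identifiers; a real list would come from your policy library.
BASELINE = {
    "risk_assessment", "model_card", "bias_testing",
    "human_oversight", "incident_playbook", "decision_logging",
}

STATE_OVERLAYS = {
    "CO": {"adverse_decision_notice", "annual_impact_assessment"},
    "CA": {"training_data_summary", "ai_content_label"},
}


def required_controls(jurisdictions) -> set:
    """Baseline controls plus the overlay for each jurisdiction served."""
    controls = set(BASELINE)
    for jurisdiction in jurisdictions:
        controls |= STATE_OVERLAYS.get(jurisdiction, set())
    return controls


def rollout_order(systems) -> list:
    """Sequence by risk, then customer impact, highest first."""
    return sorted(systems, key=lambda s: (s["risk"], s["customer_impact"]), reverse=True)


systems = [
    {"name": "support-chatbot", "risk": 1, "customer_impact": 3},
    {"name": "underwriting-model", "risk": 3, "customer_impact": 2},
    {"name": "internal-search", "risk": 1, "customer_impact": 1},
]
print(sorted(required_controls({"CA"}) - BASELINE))
print([s["name"] for s in rollout_order(systems)])
```

Keeping overlays separate from the baseline means a new state law becomes one new dictionary entry rather than a fork of the whole control set.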

Measure outcomes: bias, robustness, privacy, and transparency KPIs

Define clear KPIs for data stewardship, bias detection, system robustness, and privacy. Tie these metrics to board reports and product approvals.

- Automate evidence capture, versioning, and change management for compliance.

- Use governance reviews to enforce a consistent approach and enable safe innovation.

- Socialize the roadmap with customers to reduce friction and show tangible impact.
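For the bias KPI, one widely used screening metric in U.S. employment contexts is the adverse impact ratio behind the "four-fifths rule" in the EEOC's Uniform Guidelines. It divides each group's selection rate by the highest group's rate and flags ratios below 0.8 for review. The Python sketch below computes it from hypothetical outcome data. Treat it as one KPI among several, not a legal safe harbor or a complete fairness assessment.

```python
from collections import Counter


def selection_rates(outcomes):
    """outcomes: iterable of (group, selected: bool). Returns {group: rate}."""
    totals, selected = Counter(), Counter()
    for group, was_selected in outcomes:
        totals[group] += 1
        selected[group] += was_selected  # True counts as 1, False as 0
    return {g: selected[g] / totals[g] for g in totals}


def adverse_impact_ratios(outcomes):
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    return {g: (r / best if best else 1.0) for g, r in rates.items()}


def flag_groups(outcomes, threshold=0.8):
    """Groups whose ratio falls below the threshold (0.8 = four-fifths)."""
    return sorted(g for g, ratio in adverse_impact_ratios(outcomes).items() if ratio < threshold)


# Hypothetical outcomes: group A selected 40 of 100, group B 25 of 100.
outcomes = ([("A", True)] * 40 + [("A", False)] * 60
            + [("B", True)] * 25 + [("B", False)] * 75)
print(adverse_impact_ratios(outcomes))
print(flag_groups(outcomes))
```

Tracking this ratio per model version gives the board report a trend line instead of a one-off snapshot.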

“Focus on pilots that prove controls and deliver measurable business value.”

Conclusion

Take action now: tie governance, testing, and documentation to clear timelines so your teams can deliver measurable outcomes.

You’re ready to move from awareness to action. Anchor development practice in documented tests, disclosures, and responsible data handling to meet obligations and protect users’ rights.

For businesses working cross-border, build a unified baseline and add regional overlays. Keep stakeholders informed with simple metrics, partner with vendors and auditors, and treat trust as a product feature.

Regulation will evolve, but an inventory, risk controls, and incident playbooks will pay dividends. Use pragmatic controls to enable beneficial use while respecting legal duties and user expectations.