Last Updated on December 1, 2025

Explainable AI helps you describe a model, its likely impact, and possible biases so you can build real trust in production.

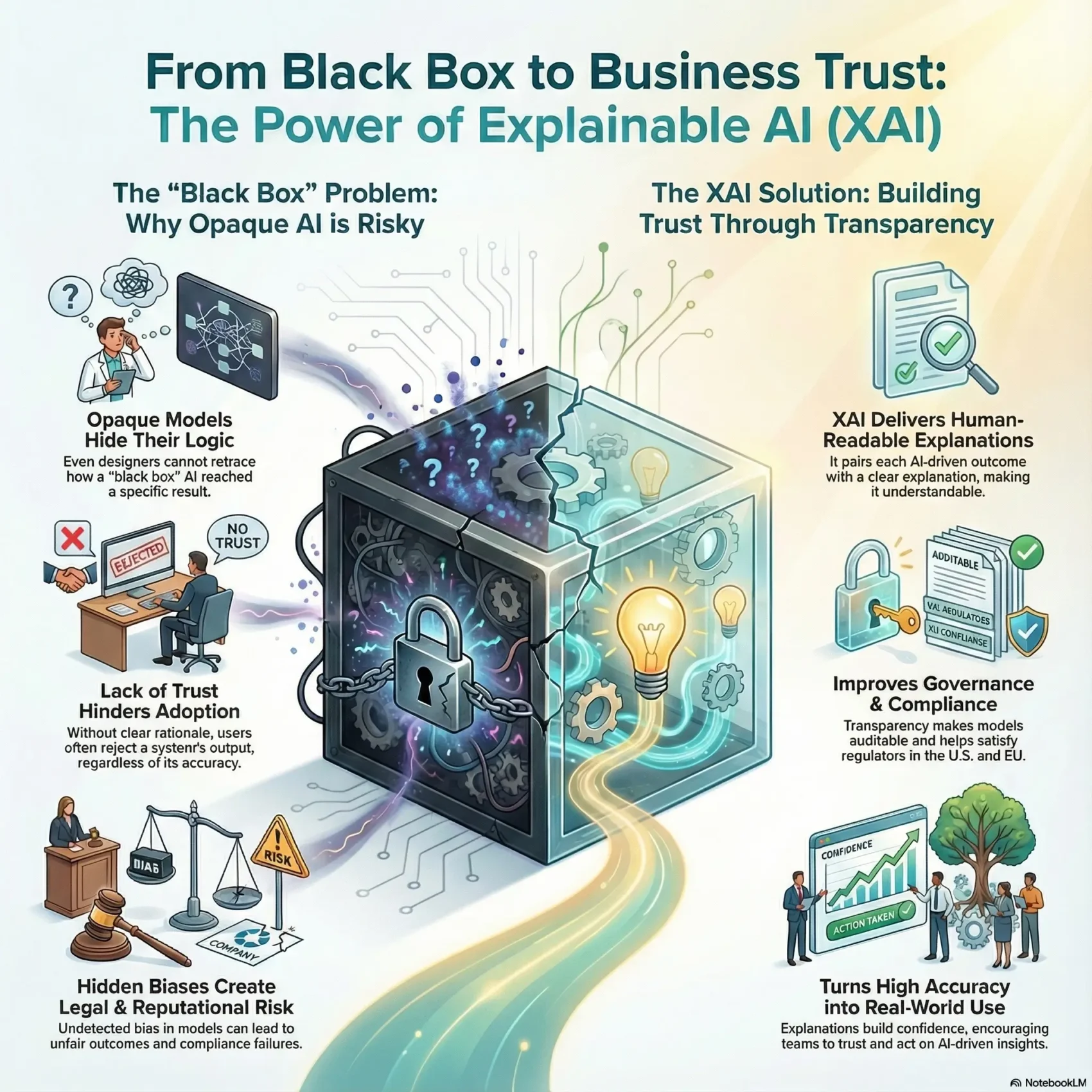

Many machine learning approaches act like black boxes, where even designers cannot retrace how algorithms reached specific results. That lack of visibility complicates accountability and auditability for your teams.

Explainability turns opaque systems into practical transparency. Good explanations illuminate model behavior, help you validate results, and make it easier to spot weaknesses in data and processes.

In short: use explainability to monitor models for drift and fairness, reduce legal and reputational risk, and create decisions you can defend to auditors, customers, and stakeholders.

Key Takeaways

- Explainable AI clarifies how models produce outcomes, boosting trust in business decisions.

- Transparency helps you detect bias and validate results across data and processes.

- Continuous monitoring catches model drift early and helps preserve performance in production.

- Clear explanations improve governance, compliance, and stakeholder confidence.

- Prioritize quick wins that deliver better decisions, faster troubleshooting, and stronger documentation.

What you need to know about explainable AI right now

Opaque models can hide decision logic, leaving teams guessing why a prediction occurred. You need clear explanations so you can trust outcomes, meet compliance, and fix problems fast.

From black boxes to transparency: why explanations matter

XAI pairs each output with a human-readable explanation so your team can trace inputs to results. This transparency helps you spot bias, validate performance, and brief stakeholders with confidence.

Explainability vs. interpretability: how you understand a model’s output

Interpretability is about how well an observer can link a cause to an outcome. Explainability goes a step further and shows how the model arrived at a specific result, so technical and nontechnical users gain practical understanding.

Regular systems vs. XAI: tracing each decision through your learning process

XAI adds tools and methods that record intermediate signals, inputs, and model choices. That traceability makes the process auditable across build, test, and deployment so you can debug faster and reduce operational risk.

- Quick win: start with attributions and simple example-based explanations.

- Value: better understanding shortens time-to-fix and improves trust in your models.
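One way to picture that traceability is a record that stores each prediction next to its inputs and attributions. The sketch below is illustrative only: the `ExplainedPrediction` class, the `to_audit_line` helper, and the credit-scoring values are hypothetical names and data, not part of any specific XAI product.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Dict, List


@dataclass
class ExplainedPrediction:
    """One prediction plus the evidence needed to audit it later."""
    model_version: str
    inputs: Dict[str, float]
    prediction: float
    attributions: Dict[str, float]  # per-feature credit for this prediction
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def top_drivers(self, k: int = 2) -> List[str]:
        """Names of the k features with the largest absolute attribution."""
        ranked = sorted(
            self.attributions,
            key=lambda name: abs(self.attributions[name]),
            reverse=True,
        )
        return ranked[:k]


def to_audit_line(record: ExplainedPrediction) -> str:
    """Serialize a record as one JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


# Hypothetical credit-scoring example; all names and values are made up.
record = ExplainedPrediction(
    model_version="credit-risk-v3",
    inputs={"income": 52000.0, "debt_ratio": 0.41, "tenure_years": 2.0},
    prediction=0.73,
    attributions={"income": -0.05, "debt_ratio": 0.22, "tenure_years": 0.09},
)
audit_line = to_audit_line(record)
print(record.top_drivers())
print(audit_line)
```

Writing one line per prediction keeps the log easy to search during an audit, and the same record can feed dashboards during build, test, and deployment.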

Why explainability drives trust, adoption, and compliance in your business

When people don’t get a clear reason for a system’s choice, they often dismiss its output no matter how accurate it is. You need explanations that turn model outputs into actions your teams will trust and use.

Human trust and user adoption: turning high accuracy into real-world use

In an example reported by McKinsey, workers rejected a high-accuracy model until they were given a clear rationale for its outputs. That change shows how explanations lift adoption and daily use.

Provide consistent rationale to your users, share short insights with frontline teams, and you convert skepticism into practical wins.

Fairness, accountability, and transparency requirements in the U.S. and EU

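Regulators increasingly require clarity. For automated decision-making, GDPR calls for “meaningful information about the logic involved,” and CCPA gives consumers the right to know what personal information a business holds about them, including inferences drawn from it and the categories of sources it came from. These requirements make explainability a compliance priority.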

- Auditability: explanations support internal and regulator reviews.

- Fairness: early signals reveal bias so you can reduce legal and reputational risk.

- Operational impact: explanations help you pinpoint where models fail and target fixes.

Pair explanations with monitoring to quantify and cut ongoing risk.

XAI methods and techniques: from feature attributions to example-based explanations

Different techniques assign credit to inputs so you can see what really drove a prediction.

Feature-based explanations quantify each feature’s contribution to an output. Sampled Shapley approximates Shapley values and works well for non-differentiable ensembles. Integrated Gradients suits differentiable models and neural networks. XRAI builds region-based saliency maps that often beat pixel-level maps for natural images.
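To make the idea of sampled Shapley values concrete, here is a minimal sketch that averages each feature's marginal contribution over random feature orderings. The `sampled_shapley` function and the `toy_model` scorer are hypothetical and written for illustration; production libraries use more efficient estimators.

```python
import random
from typing import Callable, List, Sequence


def sampled_shapley(
    predict: Callable[[Sequence[float]], float],
    instance: Sequence[float],
    baseline: Sequence[float],
    num_permutations: int = 200,
    seed: int = 0,
) -> List[float]:
    """Estimate Shapley values from random feature orderings."""
    rng = random.Random(seed)
    n = len(instance)
    totals = [0.0] * n
    for _ in range(num_permutations):
        order = list(range(n))
        rng.shuffle(order)
        current = list(baseline)  # start from the baseline input
        previous = predict(current)
        for i in order:
            current[i] = instance[i]  # switch feature i to its real value
            updated = predict(current)
            totals[i] += updated - previous  # marginal contribution of i
            previous = updated
    return [total / num_permutations for total in totals]


def toy_model(x: Sequence[float]) -> float:
    # Hypothetical non-differentiable scorer: a step rule plus a linear term.
    # The third feature is ignored on purpose.
    return (2.0 if x[0] > 0.5 else 0.0) + 3.0 * x[1]


instance = [1.0, 2.0, 5.0]
baseline = [0.0, 0.0, 0.0]
attributions = sampled_shapley(toy_model, instance, baseline)
print(attributions)
```

Within each ordering the contributions telescope, so the attributions always sum to the difference between the prediction for the instance and the prediction for the baseline. The ignored third feature receives zero credit.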

Example-based explanations

Nearest-neighbor explanations pull similar training instances from your set to show why a model behaved a certain way. They help you spot mislabeled data, find anomalies, and guide active learning.
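A nearest-neighbor lookup can be sketched in a few lines, assuming you already have an embedding for each training example. The embeddings, labels, and the `nearest_examples` helper below are hypothetical; real systems typically use an approximate nearest-neighbor index rather than a full scan.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def nearest_examples(
    query: Sequence[float],
    training_embeddings: List[Sequence[float]],
    labels: List[str],
    k: int = 3,
) -> List[Tuple[float, int, str]]:
    """Return (similarity, index, label) for the k most similar examples."""
    scored = [
        (cosine_similarity(query, emb), idx, labels[idx])
        for idx, emb in enumerate(training_embeddings)
    ]
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored[:k]


# Hypothetical 2-D embeddings; index 1 sits among "cat" examples but is
# labeled "dog", which is the kind of case worth a manual label check.
training_embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
labels = ["cat", "dog", "dog", "dog"]
neighbors = nearest_examples([1.0, 0.0], training_embeddings, labels, k=2)
for similarity, idx, label in neighbors:
    print(f"index={idx} label={label} similarity={similarity:.3f}")
```

When the closest neighbors disagree on their labels, as they do here, that is a prompt to inspect the training data before trusting the prediction.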

LIME, DeepLIFT, and approach trade-offs

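LIME is model-agnostic: it fits a simple surrogate model around a single prediction, which makes it quick to apply to tabular and text data. DeepLIFT targets deep networks and assigns credit by comparing each neuron's activation to a reference activation, which can give higher-fidelity attributions for those models. Choose based on speed, fidelity, and operational fit for your systems.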

Limits and choosing the right method

Feature attributions are local; aggregate them across data for broader insight. Also watch for over-simplification and adversarial pitfalls. Combine feature and example outputs to validate signals before you change models in production.
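One common way to aggregate local attributions is to average their absolute values per feature across many explained predictions. The sketch below assumes each explanation is a dictionary of feature name to attribution. The `global_importance` helper and the sample rows are hypothetical.

```python
from typing import Dict, List


def global_importance(local_attributions: List[Dict[str, float]]) -> Dict[str, float]:
    """Mean absolute attribution per feature, sorted from most to least important."""
    if not local_attributions:
        return {}
    totals: Dict[str, float] = {}
    for row in local_attributions:
        for feature, value in row.items():
            # Absolute values stop positive and negative credit from cancelling.
            totals[feature] = totals.get(feature, 0.0) + abs(value)
    count = len(local_attributions)
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return {feature: total / count for feature, total in ranked}


# Hypothetical attributions for three predictions.
rows = [
    {"income": 0.4, "age": -0.1, "tenure": 0.0},
    {"income": -0.6, "age": 0.2, "tenure": 0.1},
    {"income": 0.2, "age": -0.3, "tenure": 0.0},
]
importance = global_importance(rows)
for feature, score in importance.items():
    print(f"{feature}: {score:.3f}")
```

Averaging absolute values shows which features matter overall but hides direction, so keep the signed local values available when you need to know whether a feature pushed a prediction up or down.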

“Use the right method for your model type and data modality to get useful, actionable explanations.”

Operationalizing explainable artificial intelligence across your ML lifecycle

You need repeatable processes to move explanations from experiments into production systems. Start by mapping where explanation methods fit: before training, inside model design, and after deployment.

Data to deployment: pre-modeling, explainable modeling, and post-hoc explanations

Analyze data pre-modeling to find bias and quality gaps. Build interpretable choices into architecture during training.

Use post-hoc techniques to generate human-friendly narratives for specific predictions. That mix limits surprises and makes results traceable.

Monitoring quality, drift, fairness, and model risk with actionable insights

Set up continuous evaluation that watches quality, drift, and fairness. Connect alerts to dashboards so teams see potential risk early.

- Quantify risk: thresholds, alerts, and impact scores for key models.

- Prioritize fixes: surface explanations that point to data or training issues.

- Automate checks: standardize tools and methods across environments.
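As one possible drift check, the sketch below computes a population stability index (PSI) between a reference sample and a recent sample of a single feature. PSI is a swapped-in example metric, not one the text prescribes. The 0.2 alert threshold is a common rule of thumb and should be treated as an assumption to tune for your models.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between two samples, using equal-width bins from the expected sample."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    low, high = min(expected), max(expected)
    width = (high - low) / bins or 1.0  # avoid zero width for constant data

    def fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for value in values:
            index = int((value - low) / width)
            index = min(max(index, 0), bins - 1)  # clip out-of-range values
            counts[index] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(count / len(values), eps) for count in counts]

    expected_frac = fractions(expected)
    actual_frac = fractions(actual)
    return sum(
        (a - e) * math.log(a / e) for e, a in zip(expected_frac, actual_frac)
    )


ALERT_THRESHOLD = 0.2  # assumed rule-of-thumb cutoff, not a standard

reference = [i / 100 for i in range(100)]      # hypothetical training data
shifted = [0.5 + i / 200 for i in range(100)]  # hypothetical production data
psi_same = population_stability_index(reference, reference)
psi_shifted = population_stability_index(reference, shifted)
print(f"same={psi_same:.3f} shifted={psi_shifted:.3f}")
print("alert" if psi_shifted > ALERT_THRESHOLD else "ok")
```

A check like this can run on a schedule per feature, with the result compared against the threshold to decide whether to raise an alert.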

Responsible AI vs. explainable AI: complementary practices for governance

Responsible AI governance sets policies and requirements during planning, before any results exist. Explainability supplies the evidence that checks those rules after predictions run.

“Combine governance, monitoring, and clear handoffs so audits and stakeholder reviews are fast and reliable.”

Outcome: documented workflows, clear ownership of alerts, and feedback loops that update models faster and reduce operational risk.

High-impact use cases: healthcare, financial services, and criminal justice

When models supply verifiable reasons, clinicians, lenders, and courts can act with more confidence. You’ll see how clear explanations turn opaque outputs into usable insight across three high-stakes cases.

Healthcare: diagnostic transparency, image analysis, and shared decisions

In medicine, transparent model outputs like heatmaps over X-rays make image-based diagnostics safer. Clinicians use these signals to discuss options with patients and to validate model input against clinical data.

Outcome: faster case review, clearer handoffs, and higher trust in recommendations.

Financial services: credit decisions, wealth management, and fraud insights

Banks rely on model explanations to justify loan decisions and to document rationale for wealth advice. Explanations also surface fraud signals so investigators prioritize real risk.

Result: better auditability, consistent documentation, and fewer escalations from uncertain outputs.

Criminal justice: risk assessment, bias detection, and accountable processes

In courts and corrections, explanations reveal bias in training data and highlight which inputs drove a risk score. That clarity supports oversight and lets domain experts override a model when needed.

“Clear rationale in high-stakes systems reduces legal and reputational risk.”

- Capture inputs and highlight drivers so experts can validate or override a decision.

- Measure outcomes: improved accuracy on target cohorts and fewer false positives.

- Build playbooks with human-in-the-loop steps and consistent documentation for each case.
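To make "fewer false positives" measurable per cohort, a simple starting point is the false positive rate for each group. The sketch below assumes binary labels and a list of `(group, actual, predicted)` records. The groups and values are made up, and the `false_positive_rate_by_group` helper is hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def false_positive_rate_by_group(
    records: Iterable[Tuple[str, int, int]],
) -> Dict[str, float]:
    """False positive rate per group from (group, actual, predicted) records."""
    false_positives: Dict[str, int] = defaultdict(int)
    negatives: Dict[str, int] = defaultdict(int)
    for group, actual, predicted in records:
        if actual == 0:  # only true negatives can become false positives
            negatives[group] += 1
            if predicted == 1:
                false_positives[group] += 1
    return {
        group: false_positives[group] / count
        for group, count in negatives.items()
        if count > 0
    }


# Hypothetical risk-score outcomes: 1 means flagged as high risk.
records = [
    ("A", 0, 0), ("A", 0, 0), ("A", 0, 1), ("A", 0, 0), ("A", 1, 1),
    ("B", 0, 1), ("B", 0, 1), ("B", 0, 0), ("B", 0, 0), ("B", 1, 1),
]
rates = false_positive_rate_by_group(records)
gap = max(rates.values()) - min(rates.values())
print(rates, f"gap={gap:.2f}")
```

A large gap between groups does not prove bias on its own, but it tells reviewers where to look at the inputs and attributions behind the flagged cases.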

Tools and getting started: platforms, models, and practical next steps

Start small with concrete platforms that let you see which inputs move a prediction and why. Enable feature attributions first so you can see how much credit each feature receives and surface surprising drivers in your outputs.

Using Vertex Explainable AI: feature attributions and example-based insights

Vertex provides feature-based attributions (Sampled Shapley, Integrated Gradients, XRAI) and example-based explanations via nearest-neighbor search over embeddings.

Sampled Shapley fits non-differentiable models. Integrated Gradients suits differentiable neural networks and large feature spaces. XRAI highlights salient regions in natural images.
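For intuition about what Integrated Gradients computes, here is a generic sketch in plain Python. It is not the Vertex API. It assumes a tiny logistic model with made-up weights and an all-zeros baseline, and it approximates the path integral with a midpoint sum. `integrated_gradients`, `model`, and `model_gradient` are hypothetical names.

```python
import math
from typing import Callable, List, Sequence

WEIGHTS = [1.5, -2.0, 0.0]  # hypothetical model parameters
BIAS = 0.1


def model(x: Sequence[float]) -> float:
    """Logistic model: sigmoid(w . x + b)."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def model_gradient(x: Sequence[float]) -> List[float]:
    """Analytic gradient of the logistic model with respect to its inputs."""
    s = model(x)
    return [s * (1.0 - s) * w for w in WEIGHTS]


def integrated_gradients(
    grad_fn: Callable[[Sequence[float]], List[float]],
    x: Sequence[float],
    baseline: Sequence[float],
    steps: int = 100,
) -> List[float]:
    """Average gradients along the straight path from baseline to x."""
    avg_grad = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of each path segment
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        grad = grad_fn(point)
        for i, g in enumerate(grad):
            avg_grad[i] += g / steps
    # Scale the average gradient by how far each input moved from baseline.
    return [(xi - b) * g for xi, b, g in zip(x, baseline, avg_grad)]


x = [1.0, 0.5, 3.0]
baseline = [0.0, 0.0, 0.0]
ig = integrated_gradients(model_gradient, x, baseline)
print(ig)
print(sum(ig), model(x) - model(baseline))
```

The two printed totals should agree closely. The attributions summing to the change in output between baseline and input is a useful sanity check on both the step count and the choice of baseline.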

Supported models and modalities

Attributions work across AutoML and custom models for tabular, text, and images. Example lookups require TensorFlow models that output embeddings.

This mix covers common model types you’ll use in production and helps map methods to your training stack.

Your first XAI roadmap

- Define baselines and enable attributions to track which features change outputs.

- Configure nearest neighbors to pull similar examples for odd predictions.

- Validate embedding inputs, review attribution stability, and wire alerts for drift or outliers.
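One lightweight way to review attribution stability is to compare the top-ranked features from two explanation runs, for example with different baselines or sampling seeds. The `top_k_overlap` helper and the two sample runs below are hypothetical, and what counts as an acceptable overlap is an assumption you would set yourself.

```python
from typing import Dict, Set


def top_k_features(attributions: Dict[str, float], k: int) -> Set[str]:
    """The k feature names with the largest absolute attribution."""
    ranked = sorted(attributions, key=lambda name: abs(attributions[name]), reverse=True)
    return set(ranked[:k])


def top_k_overlap(first: Dict[str, float], second: Dict[str, float], k: int = 3) -> float:
    """Share of the top-k features that two attribution runs have in common."""
    if k <= 0:
        raise ValueError("k must be positive")
    shared = top_k_features(first, k) & top_k_features(second, k)
    return len(shared) / k


# Hypothetical attributions for the same prediction from two runs.
run_a = {"income": 0.40, "debt_ratio": 0.30, "age": 0.10, "tenure": 0.05}
run_b = {"income": 0.35, "debt_ratio": 0.33, "age": 0.02, "tenure": 0.12}
overlap = top_k_overlap(run_a, run_b, k=3)
print(f"top-3 overlap: {overlap:.2f}")
```

Low overlap suggests the explanation depends heavily on the baseline or sampling settings, which is worth resolving before wiring attributions into alerts.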

“Run a low-risk pilot: prove value fast, then scale with documentation and access controls.”

Conclusion

A practical way to lock in value is to treat model rationales as first-class outputs alongside predictions.

Make explainability and transparency part of every release so stakeholders can read why a decision happened and give credit where it matters. This builds trust and reduces operational risk.

Choose techniques that fit your models, including neural networks and example-based lookups, and generate an understandable output that teams can act on.

Align goals, enable methods, aggregate explanations, and integrate them into your processes. Pilot one workflow, measure adoption and error reduction, then scale across your machine learning program to meet evolving requirements and keep systems reliable after deployment.