Last Updated on January 29, 2026

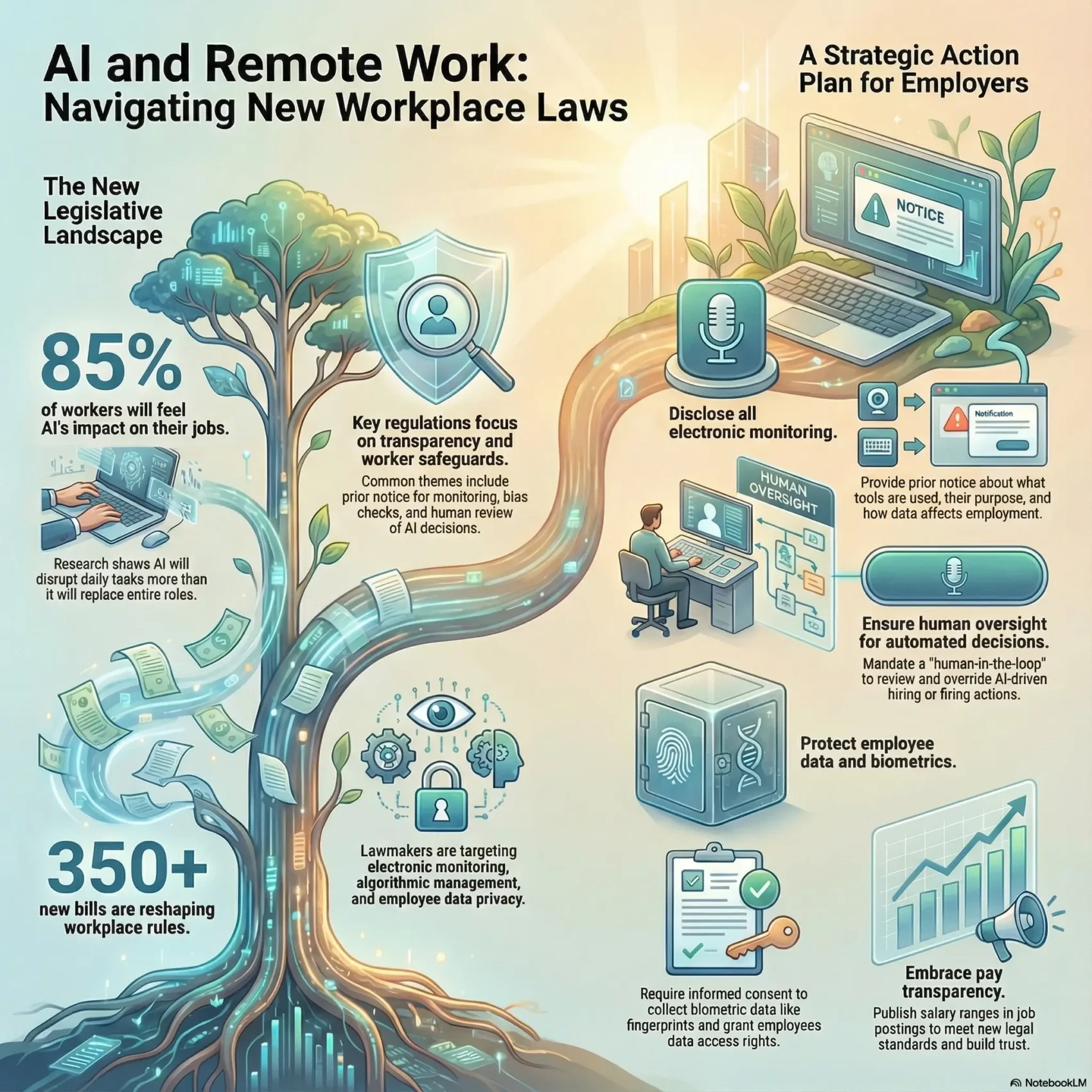

Brookings found that more than 30% of workers could see at least half of their tasks disrupted by generative artificial intelligence, and 85% could see at least a tenth of their tasks affected.

More than 350 state and federal bills now touch electronic monitoring, algorithmic management, data privacy, and automation. That shift matters for your job and for how employers design teams.

KPMG legal teams warn that pay transparency, ESG checks, cross-border rules for remote roles, and shifting benefits will demand new compliance steps. Treating these changes as an afterthought raises clear risks for reputation and operations.

Read on to see which industries draw the most attention, which rules affect HR and legal functions, and how you can use new guardrails to build trust rather than merely check a box.

Key Takeaways

- Understand where policy makers prioritize worker safeguards and transparency.

- Know which industries face the most regulatory attention.

- Spot immediate compliance risks that affect operations and reputation.

- Learn how employers can turn rules into trust and better practices.

- See the main themes—monitoring, algorithms, privacy, automation—and their impact.

Why the future of work legislation matters to you right now

Hundreds of bills are moving fast across state and federal agendas to set rules on monitoring, AI decisions, and employee data. Brookings (October 2024) warns that many employers deploying AI are rushing adoption without safeguards or a strong worker voice, even in highly exposed occupations.

You should care because these changes touch how you do your job, how hiring happens, and how your performance gets judged. New rules can give you notice, transparency, and appeal rights before automated tools affect employment outcomes.

Aligning with emerging standards reduces employer and employee risks. Expect enforceable disclosures, limits on biometrics, and algorithmic management rules that require human oversight and clearer documentation.

- You are living through a policy sprint that reshapes how your work is monitored and how decisions use your data.

- You benefit when rules demand notice, transparency, and appeal rights for AI-driven employment choices.

- You can plan career moves and training by tracking where protections and incentives for workers appear.

Pay attention now: these shifts will have near-term impacts on hiring, scheduling, productivity tracking, and long-term career planning. Use the rules to push for clearer employer practices that protect you and reduce unfair risks.

The stakes: AI disruption, worker voice, and underprepared policy landscapes

AI is changing tasks inside jobs more than it is replacing whole roles. That means you should plan for shifts in what you do each day, not just fear total job loss. Brookings data shows many workers will see large task-level change, especially in professional and clerical roles.

What Brookings research signals about task disruption and exposed occupations

“More than 30% of workers may face disruptions to at least half of their tasks, with 85% seeing at least a tenth affected by current-generation AI.”

You’re most exposed if you work in STEM, finance, law, architecture, engineering, or administrative roles. Manual, physically intensive roles tend to face slower change unless robotics speeds up.

Why worker voice, unions, and employer-deployers will shape outcomes

Where workers have representation, rollouts tend to include safeguards on data use, surveillance limits, and human review. Where employers rush AI under investor pressure, the risk of unfair employment decisions rises.

- Plan for task-level disruption: AI can write, code, summarize, and analyze with limited oversight.

- Push for impact assessments, pilot tests, and human oversight before AI affects performance.

- Participate in councils or bargaining to demand documentation, transparency, and appeal rights.

Trend snapshot: Where U.S. workplace tech policy is heading

Expect more comprehensive bills that bundle monitoring rules, algorithmic oversight, and privacy protections into single proposals. Colorado’s AI Act (2024) already sets a model by requiring transparency and impact assessments for high-risk systems used in employment.

Nine themes now shape the policy map: electronic monitoring; algorithmic management; data privacy and biometrics; automation and job loss; human-in-the-loop (HITL); education and retraining; just-cause discipline rules; sectoral rules for hospitals and schools; and transparency/impact assessments.

- You’ll see broader AI acts that sweep employment tools into state-level law, raising obligations for employers even if your company is based elsewhere.

- Cross-pollination will create model policies—prior notice, bias checks, and private rights of action reappear in many drafts.

- Sector-specific rules will target high-risk industries like health care and education, balancing safety with workers’ rights.

- New standards will push public disclosure of surveillance tools and require documented justifications for their use.

Takeaway: Use early examples to build internal governance now. Track impact assessments, inventory your tools, and align training so you meet incoming requirements and protect your workers and data.

Electronic monitoring: Guardrails for data, rights, and transparency

Electronic monitoring is becoming a central battleground for worker privacy and employer transparency. New York’s 2021 notice law, California bills introduced in 2025, proposals in Maine, Massachusetts, Vermont, and Washington, and the federal Stop Spying Bosses Act together set clear expectations for how tracking systems operate in the workplace.

Notice and consent requirements: From “bossware” to responsible use

You must provide detailed prior notice about any monitoring, explain purposes, and say whether the data can affect employment decisions. Laws now push employers to disclose tools, retention periods, and how workers can access and correct their data.

Limits on surveillance scope, data minimization, and off-duty protections

Keep monitoring narrowly tailored. Avoid off-duty surveillance and sensitive areas, document minimization practices, and inventory systems like keystroke loggers, screen capture, wearables, and telematics.

Just-cause frameworks and corroboration standards for discipline

You cannot rely solely on monitoring outputs to discipline or terminate. Several proposals in Illinois and New York City would require independent corroboration and give workers the right to see the underlying records before adverse action.

- Disclose performance standards and quota logic when monitoring tracks productivity.

- Run bias and harm assessments before deployment and on updates.

- Enable access, correction, appeal, and no-retaliation protections for employees.

- Map data flows, publish retention rules, and separate safety monitoring from personnel actions.
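The inventory and disclosure steps above lend themselves to a simple internal check. The following is a minimal Python sketch, assuming a hypothetical inventory schema; the field names and the four gap rules are illustrative choices drawn from the guardrails in this section, not requirements taken from any specific statute.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class MonitoringTool:
    """One entry in a hypothetical inventory of workplace monitoring systems."""
    name: str
    purpose: str                   # stated business purpose ("" if undocumented)
    retention_days: Optional[int]  # None means no published retention rule
    affects_employment: bool       # can outputs feed discipline, pay, or termination?
    notice_given: bool             # did workers receive prior written notice?
    corroboration_required: bool = False  # independent check before adverse action


def disclosure_gaps(tool: MonitoringTool) -> List[str]:
    """List the illustrative guardrails this tool is missing."""
    gaps = []
    if not tool.notice_given:
        gaps.append("no prior notice")
    if not tool.purpose.strip():
        gaps.append("purpose not documented")
    if tool.retention_days is None:
        gaps.append("no retention rule")
    if tool.affects_employment and not tool.corroboration_required:
        gaps.append("used for employment decisions without corroboration")
    return gaps


# Hypothetical inventory entries for illustration only.
inventory = [
    MonitoringTool("keystroke logger", "", None, True, False),
    MonitoringTool("vehicle telematics", "driver safety", 90, False, True),
]
for tool in inventory:
    print(tool.name, disclosure_gaps(tool))
```

Running it flags every missing guardrail for the keystroke logger and none for the telematics system. Keeping the rules in code makes it easy to rerun the check whenever a tool is added or updated.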

For practical guidance on deploying compliant monitoring and preserving trust, see this AI employee monitoring guide.

Algorithmic management and “No Robo Bosses”: Human oversight by design

When software scores candidates or sets schedules, you need a right to an explanation and a real human reviewer. California’s No Robo Bosses Act (2025) and Massachusetts’ FAIR Act push this idea. The Colorado AI Act (2024) adds transparency and impact assessments for consequential employment choices.

Transparency before and after decisions: Your right to explanations and appeal

You should see clear disclosures before any algorithmic tool is used. That includes what the tool decides, which data feed it, and its limits in plain language.

After a decision, you should receive notice, a chance to correct input data, and a clear appeals path with timelines.

Prohibitions on high-risk tech and individualized wage rules

- Do not use emotion recognition or facial recognition for employment decisions where bills or laws ban them.

- Avoid individualized wages based on surveillance; several states are moving to ban that.

- Ensure human reviewers can override system outputs for hiring, firing, scheduling, and performance actions.

Impact assessments across equity, safety, privacy, and labor rights

Pre-deployment assessments should cover economic security, equity, health and safety, privacy, and union rights. Maintain model cards, document training data provenance when feasible, and test for drift and disparate impact. Audit vendor contracts so worker protections and compliance flow down.
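For the disparate-impact testing mentioned above, one widely used first screen in U.S. employment is the four-fifths (80%) rule from the federal Uniform Guidelines on Employee Selection Procedures: a group selected at less than 80% of the rate of the most-selected group is generally treated as showing adverse impact. The Python sketch below applies that rule to a screening tool's results; the group labels and counts are made up, and the rule is a rough screen rather than a legal conclusion.

```python
from typing import Dict, Tuple


def selection_rates(outcomes: Dict[str, Tuple[int, int]]) -> Dict[str, float]:
    """Map each group to (selected / applicants), skipping empty groups."""
    return {g: sel / total for g, (sel, total) in outcomes.items() if total > 0}


def impact_ratios(outcomes: Dict[str, Tuple[int, int]]) -> Dict[str, float]:
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no one was selected; impact ratios are undefined")
    return {g: r / best for g, r in rates.items()}


def four_fifths_flags(outcomes: Dict[str, Tuple[int, int]],
                      threshold: float = 0.8) -> Dict[str, bool]:
    """True where a group's impact ratio falls below the threshold."""
    return {g: ratio < threshold for g, ratio in impact_ratios(outcomes).items()}


# Made-up screening results: (candidates advanced, candidates screened)
screening = {"group_a": (48, 80), "group_b": (12, 40)}
print(impact_ratios(screening))      # group_b's rate is half of group_a's
print(four_fifths_flags(screening))
```

Rerunning the same check after each model update is one simple way to catch drift in outcomes between formal audits.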

Data privacy and biometrics at work: Closing the worker rights gap

Data rules at work are shifting fast, and that changes what you can see, correct, and demand from your employer.

California’s CCPA (2018) stands out because it extends consumer-style rights to employees. Most other state privacy statutes still exclude workers, contractors, and applicants. That split creates uneven protections across the U.S.

CCPA coverage versus other state models

You should assume evolving rights to access, correct, and limit processing of your information, especially in California. Where laws exclude employees, adopt company policies that match stronger standards to reduce risk and confusion.

BIPA-style protections and retention rules

Biometric laws, notably Illinois’ BIPA, require informed consent and strict retention and destruction timelines. Many employers have faced class actions for skipping notice or keeping fingerprint and face data too long.

- You must obtain consent before collecting biometric identifiers and explain retention in writing.

- Limit sharing and avoid selling employee data to third parties unless legally permitted.

- Adopt encryption, narrow access controls, and a published retention schedule for sensitive records.
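A published retention schedule is easier to enforce when deletion deadlines are computed rather than remembered. The sketch below assumes a BIPA-style rule (destroy biometric data once the initial purpose is satisfied or within three years of the worker's last interaction, whichever comes first); confirm the actual statutory text with counsel. The record fields are hypothetical, and three years is approximated conservatively as 3 * 365 days.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional

# Conservative stand-in for "three years": never later than the real anniversary.
MAX_RETENTION = timedelta(days=3 * 365)


@dataclass
class BiometricRecord:
    """Hypothetical record of a stored fingerprint or face template."""
    employee_id: str
    last_interaction: date                       # e.g., last badge-in or shift
    purpose_satisfied_on: Optional[date] = None  # e.g., separation date


def destruction_deadline(rec: BiometricRecord) -> date:
    """Earlier of: purpose satisfied, or three years after last interaction."""
    three_year_limit = rec.last_interaction + MAX_RETENTION
    if rec.purpose_satisfied_on is None:
        return three_year_limit
    return min(rec.purpose_satisfied_on, three_year_limit)


def due_for_destruction(records: List[BiometricRecord], today: date) -> List[str]:
    """IDs whose destruction deadline is today or already past."""
    return [r.employee_id for r in records if destruction_deadline(r) <= today]


records = [
    BiometricRecord("A", last_interaction=date(2022, 1, 10)),
    BiometricRecord("B", last_interaction=date(2025, 6, 1),
                    purpose_satisfied_on=date(2025, 6, 30)),
    BiometricRecord("C", last_interaction=date(2025, 6, 1)),
]
print(due_for_destruction(records, today=date(2025, 7, 1)))  # ['A', 'B']
```

Record A has passed the three-year limit and record B's purpose ended when the employee left, so both are due; record C is still within its window.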

Hiring rules for AI assessments and facial recognition

Several states now require notice and consent for AI-enabled hiring tools and video interviews. You should disclose how assessments work, give candidates a chance to consent, and align vendor contracts with breach-notification and deletion requirements.

“Adopt BIPA-like practices even where not mandated to lower litigation risk and build trust.”

Practical step: Map your data, document lawful bases for processing, and update contracts so technical and legal safeguards match worker rights.

Automation and job loss: Protective rules, incentives, and disclosure

New rules now carve out roles that cannot be replaced by automation and force clearer disclosure when technology drives layoffs.

Defining core job functions that can’t be automated away

Several states now bar AI substitution in licensed or critical roles. Illinois (2025) forbids replacing mental health professionals and community college faculty with AI. Oregon limits ads that present AI as licensed nurses.

You should codify core job duties in job descriptions and labor agreements so those functions are legally protected.

Taxes, subsidies, and WARN updates that spotlight tech-driven layoffs

New York’s 2025 WARN update requires disclosure when layoffs stem from automation. Expect incentives that either penalize displacement or fund retraining and apprenticeships.

Prepare to disclose technology-driven restructuring and meet enhanced notice rules in affected states.

Consent and compensation for digital replicas and training AI with your work

Multiple 2024–2025 laws and proposals require consent before using a worker’s likeness or work product to train models. California, Illinois, and New York have already acted on this; federal proposals like the No Fakes Act and COPIED Act target nonconsensual training.

- Secure written consent before creating digital replicas and plan compensation where required.

- Run automation impact assessments and publish results so workers see risks and mitigation plans.

- Engage unions and representatives early to negotiate fair transition terms and redeployment options.

You can reduce risk by framing technology changes as augmentation, not elimination, and by following guidance on adapting roles: job automation adaptation.

Education, retraining, and human-in-the-loop: Building a resilient workforce

Employers must treat retraining as a core obligation, not an optional perk, when new systems change job tasks. You should expect notice requirements and paid time for training under proposals like New Jersey’s 2024–2025 bills and federal initiatives that expand retraining funds.

Employer obligations: Notice, retraining, and priority placements

You must give timely notice when job duties or skill needs shift due to new tools. Offer structured training, paid learning time, and priority placement into open roles.

Practical steps: codify redeployment pathways, document skills portability, and track outcomes so workers see clear job ladders.

Public investment models and sector partnerships for AI upskilling

Federal proposals like the Investing in Tomorrow’s Workforce Act and Workforce of the Future Act push agencies to fund certificate programs and apprenticeships.

- Partner with community colleges and unions to align curricula to local labor demand.

- Use public grants and tax credits to offset costs and scale apprenticeships.

- Measure training impact with performance and safety metrics and refine programs.

HITL standards that preserve autonomy, safety, and performance

Align with emerging standards so humans remain accountable in safety-critical management and decision loops. Build HITL rules that require human review, record keeping, and clear escalation paths.

Embed ethics, privacy, and bias awareness into every course so workers understand limits and can spot harmful patterns in data or tool outputs.

Gender pay equity and pay transparency: Standards becoming the norm

Pay transparency is moving from optional policy to expected practice. KPMG predicts gender pay equity and published pay bands will be mainstream across North America and the EU. That shift means you should expect clearer job architecture and regular reporting.

If your company ignores these trends, you risk financial claims and brand damage. New laws in many places already require salary ranges in postings and periodic reports. Employers must run rigorous job evaluations that compare education, experience, effort, and conditions.

You can use transparency to build trust with candidates and current workers. Publish pay bands, align variable compensation to equity goals, and prepare time-bound remediation plans when gaps appear.

- Publish salary ranges and clear job bands for all roles to meet legal and market pressure.

- Run standardized job evaluations to close gender gaps across occupations.

- Prepare for audits: document remediation timelines and link compensation to equity goals.

- Train managers to discuss pay consistently and coordinate with recruiting and communications.
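A first pass at the gap analysis described above can be a within-band comparison of median pay. This Python sketch is a raw, unadjusted comparison under stated assumptions: the band codes, group labels, salaries, and the 5% review threshold are all hypothetical, and a real audit would typically also control for factors such as experience and location, for example with regression.

```python
from collections import defaultdict
from statistics import median
from typing import Dict, Iterable, List, Tuple


def gap_by_band(employees: Iterable[Tuple[str, str, float]],
                reference: str = "men",
                comparison: str = "women") -> Dict[str, float]:
    """Median pay gap per job band, as a fraction of the reference group's median.

    Bands lacking either group are skipped rather than guessed.
    """
    pay: Dict[str, Dict[str, List[float]]] = defaultdict(lambda: defaultdict(list))
    for band, group, salary in employees:
        pay[band][group].append(salary)
    gaps = {}
    for band, by_group in pay.items():
        if by_group[reference] and by_group[comparison]:
            ref_median = median(by_group[reference])
            gaps[band] = (ref_median - median(by_group[comparison])) / ref_median
    return gaps


def bands_to_review(gaps: Dict[str, float], threshold: float = 0.05) -> List[str]:
    """Bands whose gap, in either direction, meets a hypothetical threshold."""
    return sorted(band for band, gap in gaps.items() if abs(gap) >= threshold)


# Made-up payroll rows: (job band, group, annual base pay)
staff = [
    ("L2", "men", 70000), ("L2", "men", 72000), ("L2", "men", 74000),
    ("L2", "women", 66000), ("L2", "women", 68000),
    ("L3", "men", 90000), ("L3", "men", 92000), ("L3", "women", 91000),
    ("L4", "men", 120000),
]
gaps = gap_by_band(staff)
print(gaps)                   # L2 about 0.069, L3 0.0; L4 skipped
print(bands_to_review(gaps))  # ['L2']
```

Bands flagged here become candidates for the documented, time-bound remediation plans described above.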

Unionization, collective action, and the new bargaining agenda

Labor unrest and higher visibility into executive pay are sharpening demands for stronger workplace safeguards. You should expect negotiations to cover AI deployment, data handling, and automation protections as core items.

Economic uncertainty, executive pay transparency, and strike dynamics

KPMG notes rising union activity amid inflation and restructuring. Recent bargaining, including the high-profile 2023 writers’ strike, put AI safeguards on the table.

- Prepare to bargain over algorithmic transparency, appeal paths, and monitoring limits.

- Engage early with worker representatives and form joint tech committees to reduce conflict.

- Budget for training, redeployment, and severance tied to automation and pilot programs.

- Ensure any tech rollout complies with labor law and does not chill organizing or identify protected activity.

“Collective agreements now often include data rights and HITL rules that bind employers across sites.”

Watch public scrutiny on layoffs tied to tech and use collective bargaining to set clear rights, retention limits, and shared governance for impact reviews.

ESG transparency and responsible supply chains: Compliance meets technology

KPMG notes that rising transparency laws force firms to build tech systems that verify supplier claims and spot fraud. These policies span Australia, the UK, EU, Canada, and the U.S., and they raise what regulators and customers expect from industries that rely on complex sourcing.

Supplier due diligence, human rights reporting, and verification systems

You should implement supplier due diligence programs that verify policies on forced labor, harassment, and working conditions across tiers. Use data pipelines to collect attestations, flag anomalies, and trigger third-party checks or on-site audits.

- Build audit-ready records: adopt standards and documentation that support public reporting and regulatory review.

- Use technology: case management systems help track findings, corrective actions, and timelines to create a defensible trail.

- Integrate worker voice: include direct information from employees and contractors to surface issues that paperwork can miss.

- Mitigate risks: train procurement, add contractual clauses, and set remediation timelines to reduce reputational harm.

Expect ESG disclosures to become more prescriptive. Maintain a global baseline that meets the strictest regional rules and publicize an example where stronger compliance improved both worker outcomes and supply reliability.

Wellness, mental health, and workplace safety: Legal obligations expand

Your workplace must treat psychological safety and ergonomic risk with the same rigor as physical hazards.

Regulators and advisors now press employers to broaden health programs after the pandemic. That includes accommodations for neurodiversity and substance use issues, and clearer protocols for bullying and harassment.

Practical action: update policies, map escalation paths, and train managers to separate performance coaching from harassment response.

- Update accommodations, document decisions, and coordinate with legal to meet confidentiality and other legal requirements.

- Offer benefits like EAPs, teletherapy, and scheduling flexibility so employees can access care quickly.

- Adapt safety programs to include cognitive load, remote ergonomics, and fatigue risks for hybrid teams.

You can cut legal exposure by using standard investigation protocols and keeping clear records of actions taken. Gather anonymous feedback and track wellness metrics while protecting privacy.

Make it stick: design reasonable workloads, build recovery time into roles, and have leaders model healthy habits so changes are cultural, not just on paper.

Remote work risk and compliance: Cross-border rules, taxes, and policies

Remote hiring and cross-border schedules create new tax, payroll, and legal puzzles for any distributed team.

KPMG predicts programs will expand, but governments have not eased cross-border requirements. That gap means you must build governance that keeps operations and people safe.

Designing flexible programs and guarding against pitfalls

Define eligible locations and maximum roaming durations to limit tax residency and permanent establishment risk.

“Treat roaming and unlimited PTO as controlled benefits, not open permissions.”
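Location limits only work if day counts are accurate. Here is a minimal Python sketch, assuming a per-day work-location log and a single hypothetical annual cap per non-home country; real tax residency and permanent establishment tests differ by country and treaty, so the cap value is a placeholder to set with tax advisors.

```python
from collections import Counter
from datetime import date, timedelta
from typing import Dict


def days_by_country(work_log: Dict[date, str], year: int) -> Counter:
    """Count logged workdays per country within one calendar year."""
    return Counter(country for day, country in work_log.items() if day.year == year)


def over_cap(work_log: Dict[date, str], year: int, home: str,
             cap_days: int) -> Dict[str, int]:
    """Countries other than home where logged days exceed the (hypothetical) cap."""
    counts = days_by_country(work_log, year)
    return {c: n for c, n in counts.items() if c != home and n > cap_days}


# Hypothetical log: 40 days working from Portugal, then 160 days from home in the US.
start = date(2025, 1, 1)
log = {start + timedelta(days=i): ("PT" if i < 40 else "US") for i in range(200)}
print(over_cap(log, 2025, home="US", cap_days=30))  # {'PT': 40}
```

In practice the same counts could feed the self-service portal mentioned below, so employees see how close they are to a limit before they book travel.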

Documentation, payroll harmonization, and benefits alignment

- Document work locations, equipment use, and expense rules to support audits.

- Harmonize payroll cycles and benefits to prevent inequities and withholding errors.

- Vet collaboration tools and data transfers for cross-border privacy and security.

- Clarify unlimited PTO processes, blackout dates, and payout rules to avoid disputes.

You should coordinate with finance and tax advisors and train managers to avoid proximity bias. Use self-service portals so employees stay in sync on payroll, benefits, and location records.

Harmonizing policies across employers: From acquisitions to job architecture

When companies combine, you face a tangle of inherited rules that block hiring, flexible schedules, and clear pay decisions. KPMG expects HR and Global Mobility teams will spend many years aligning legacy policies and compensation after deals.

Aligning compensation, benefits, and role bands to meet transparency rules

Start with a clean inventory. You should list all inherited policies on pay, hours, leave, location, and variable compensation to spot conflicts and gaps.

- Create a unified job architecture with role bands, salary ranges, and competencies that meet transparency rules across states.

- Plan multi-year harmonization workstreams with governance, change management, and stakeholder communications.

- Assess constructive dismissal risks where contractual terms change and manage transitions carefully.

- Consolidate payroll and benefits vendors and cycles to streamline administration while preserving compliance.

- Coordinate with labor relations so bargaining and management questions are anticipated, not reactive.

Use technology to maintain a living, version-controlled policy library and to measure success against pay equity, mobility, hiring speed, and exception rates. Set global baselines that exceed local minimums to reduce patchwork complexity and improve fairness.

Pensions, benefits, and compensation shifts in an aging workforce

As demographics shift, retirement plan design is now a frontline compliance and talent issue for you and your team. KPMG notes aging populations and tighter rules are pushing up pension funding costs and legal oversight.

You should reassess retirement plans to balance cost, compliance, and generational preferences. Consider phased retirement, portability features, and socially responsible investment options to meet a changing workforce.

Manage any plan wind-down with legal counsel to avoid ERISA and tax pitfalls. Prepare plan amendments and timely employee notices before regulatory or market shifts force rapid changes.

Practical steps you can take include stronger reporting for pension funding, clearer participant communications, and simpler choice architectures. Align total compensation—base, variable, and benefits—with transparency and equity goals across roles and geographies.

- Simplify plan choices and offer decision support to raise engagement.

- Evaluate alternative benefits such as student loan help and caregiving support to retain talent at different life stages.

- Expect greater scrutiny on fees, investment options, and participant outcomes in coming years.

Coordinate benefits strategy with broader workforce planning and remote policies so changes to retirement or other pay elements fit your hiring and retention goals.

Your strategic action plan: Practical steps for employers and employees

Start with a short, practical plan that aligns governance, tools, and training to legal and ethical requirements. Focus on quick wins and a staged approach so you meet urgent requirements while building mature oversight.

For employers: impact assessments, governance, and transparent AI adoption

Create a governance board with legal, HR, IT, and worker representation to set policy and approve deployments.

- Use standardized impact assessments that cover bias, privacy, safety, labor rights, and performance impact.

- Publish plain-language notices, a registry of tools, and clear contact points for questions and appeals.

- Embed human-in-the-loop rules: define which decisions need review, override criteria, and escalation SLAs.

- Negotiate vendor terms for data minimization, deletion on termination, audit rights, and compliance warranties.
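The human-in-the-loop rule in the list above can be made concrete as a gate that blocks consequential decisions until a named person confirms or overrides the tool. The Python sketch below is illustrative only: the set of consequential decision types, the 48-hour SLA, and all function names are hypothetical policy choices, not taken from any statute.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Optional

# Hypothetical policy settings; each employer would define its own.
CONSEQUENTIAL = {"hire", "fire", "schedule", "performance_rating", "pay"}
REVIEW_SLA = timedelta(hours=48)


@dataclass
class Decision:
    decision_type: str
    model_output: str
    created_at: datetime
    reviewer: Optional[str] = None
    final_outcome: Optional[str] = None
    audit_log: List[str] = field(default_factory=list)


def needs_human_review(d: Decision) -> bool:
    return d.decision_type in CONSEQUENTIAL


def record_review(d: Decision, reviewer: str, outcome: str, reason: str) -> None:
    """A named person confirms or overrides the tool, with a logged reason."""
    d.reviewer = reviewer
    d.final_outcome = outcome
    action = "confirm" if outcome == d.model_output else "override"
    d.audit_log.append(f"{action} by {reviewer}: {reason}")


def effective_outcome(d: Decision) -> Optional[str]:
    """Consequential decisions stay pending (None) until a human signs off."""
    if needs_human_review(d):
        return d.final_outcome
    return d.model_output


def is_escalated(d: Decision, now: datetime) -> bool:
    """A pending review that has outlived the SLA gets escalated."""
    return (needs_human_review(d) and d.reviewer is None
            and now - d.created_at > REVIEW_SLA)


d = Decision("fire", "terminate", created_at=datetime(2025, 3, 3, 9, 0))
print(effective_outcome(d))                         # None: blocked pending review
print(is_escalated(d, datetime(2025, 3, 6, 9, 0)))  # True: past the 48-hour SLA
record_review(d, "hr_lead", "retain", "monitoring data not corroborated")
print(effective_outcome(d), d.audit_log)
```

Returning `None` rather than the model's output for pending decisions keeps the default safe: nothing consequential happens unless a person acts, and every confirmation or override leaves an audit entry.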

For workers: exercising data rights, understanding monitoring, and leveraging training

Know your rights to access data, request corrections, and appeal decisions without retaliation.

- Ask for plain notices about what tools collect and how they affect performance or hiring.

- Demand training so managers and teams interpret outputs and avoid overreliance on automated scores.

- Join governance or worker councils to shape the employer approach and reskilling plans.

Measure and iterate: track equity and performance metrics after deployment and adjust your approach based on evidence. Prioritize clear notices, a tool registry, and baseline assessments as the first part of a sustainable program.

Future of work legislation: What to watch next

Watch how states stitch monitoring, algorithm rules, and privacy into one comprehensive bill—these packages change employer duties fast.

Pay attention to laws that force public disclosure or registration of surveillance and decision systems. Many drafts also ban automated individualized wage-setting and add BIPA-style biometric limits.

You should track sector rules in education, health care, logistics, and public safety where risks are highest. Expect wider adoption of AI impact assessments modeled on Colorado’s approach.

Keep a close eye on rapid generative AI rollouts. Set internal pause points for high-risk technology pilots and use early enforcement actions as examples to refine controls and training.

- Watch for combined state frameworks that merge monitoring, algorithmic management, and privacy.

- Monitor bans on high-risk tools and stronger appeal rights for employees.

- Plan scenario exercises over the next few years to stress-test compliance and workforce shifts.

Actionable tip: use public settlements and agency guidance as concrete examples to tighten policies, map data flows, and update training so you stay ahead of changing technology risks.

Conclusion

This moment asks you to treat AI governance and monitoring as a core capability, not a sidebar.

Brookings’ research shows both the urgency of this moment and why worker voice matters. KPMG predicts pay transparency, ESG checks, and remote expansion will drive multi-year harmonization across roles.

Act now: lean into transparency about tools, data, and decisions to build trust with workers and stay ahead of law. Co-design change with staff, align training and HITL rules, and harmonize pay and policy across units. Measure equity, safety, performance, and retention at every level.

You can make a real impact by prioritizing ethics, continuous learning, fair pay, and wellbeing. Treat governance as part of strategy and your job becomes keeping technology useful, safe, and trusted.