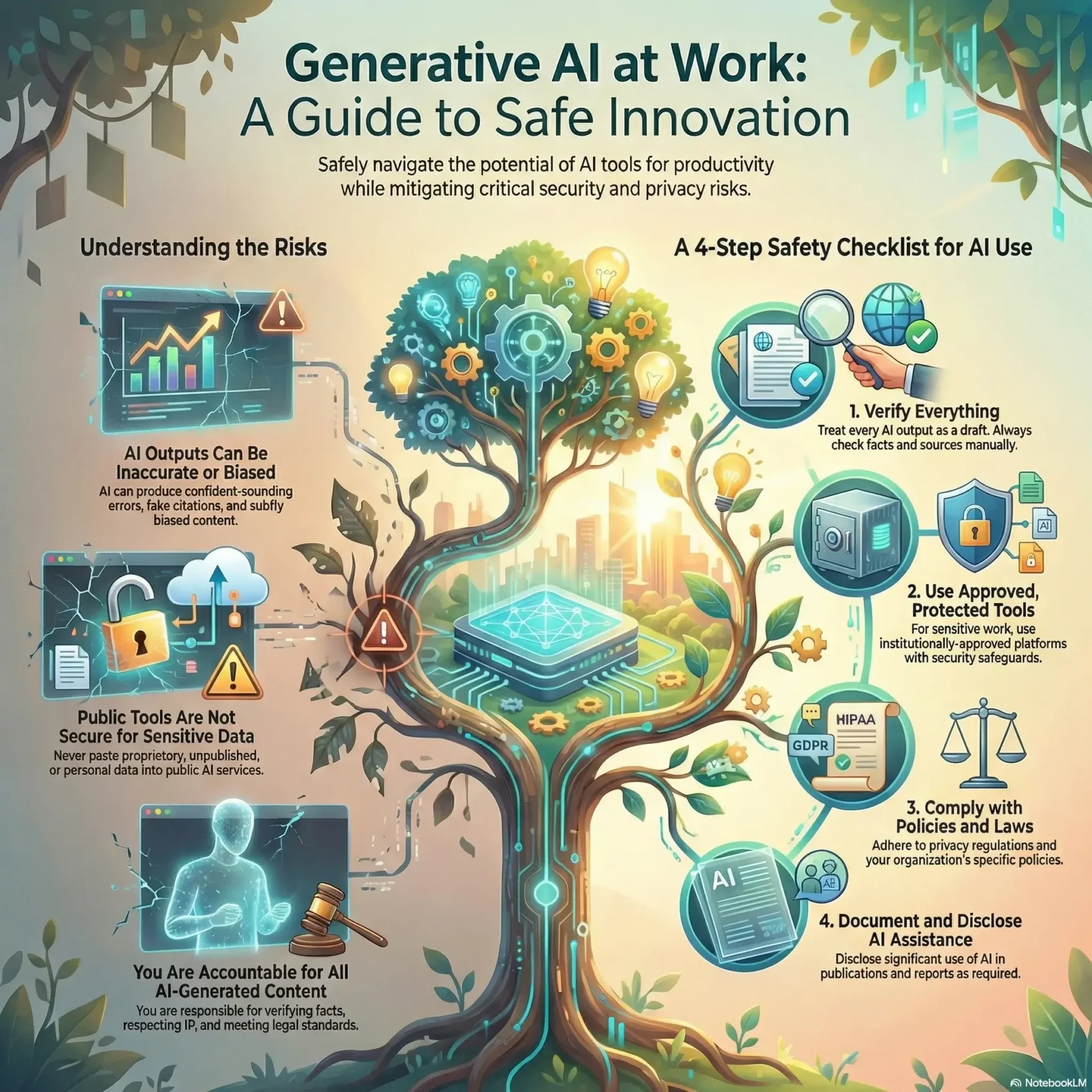

You are about to get a clear, friendly primer on how generative tools fit into your daily work. This intro explains why innovation matters and how to protect privacy, security, and accuracy while creating new content from prompts.

Think of this as a short roadmap. It shows when to use public tools and when to keep research inside protected systems. You’ll learn the simple questions to ask before you paste sensitive data into a prompt: What is this? Who can see it? How might it be reused?

Universities and enterprises stress that you remain responsible for any output. Verify facts, respect intellectual property, and follow laws like FERPA, HIPAA, or GDPR. For safe practice, explore within approved environments and disclose the role of these tools in research or publications.

Key Takeaways

- Use tools to speed routine work, but keep human judgment central.

- Protect sensitive data by choosing institutionally approved options.

- Verify accuracy and cite sources; you are accountable for outputs.

- Ask a few plain questions before sharing information in a prompt.

- Align your use with institutional principles and applicable law.

- For privacy resources, consider a tailored privacy policy generator.

Understanding the innovation-security tradeoff in generative artificial intelligence today

Tools that convert prompts into text or images can save time, but they also introduce new risks. You’ll see how prompt-based systems turn simple instructions into polished output and why those results still need your judgment.

What these tools do and how they work

Prompt systems predict likely words or pixels from patterns learned in training data. That lets a single tool draft text, format summaries, or create images from short directions.
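The idea of predicting likely words from learned patterns can be shown with a toy sketch. This is not how production systems are built (they use large neural networks); it is a minimal word-pair counter, and the `corpus` string and function names are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical training text; a real model learns from far more data.
corpus = "verify the facts and verify the sources and verify the facts"
model = train_bigrams(corpus)

print(predict_next(model, "the"))      # most common word after "the" in the corpus
print(predict_next(model, "privacy"))  # unseen word, so no prediction
```

Even this toy version shows why output needs checking: the model repeats whatever pattern was most common in its training text, whether or not it is true.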

Opportunities and risks in practice

These tools shine for brainstorming, outlines, and quick formatting. They speed routine work and help you iterate faster.

But results can be inaccurate, unverifiable, or biased. Limitations in training data can produce confident-sounding errors and subtly persuasive framing. Public tools may store prompts, so uploading research data or unpublished manuscripts can amount to public disclosure.

Trust but verify: ask for sources, cross-check facts, and flag perfect-looking citations that lack a traceable reference.

“Perfectly formatted citations that don’t exist are a common signal of risk.”

- Use tools for drafts and iteration.

- Exercise caution with sensitive data and final publications.

- Verify outputs before you reuse content or share results.
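The citation check described above can be partly automated as a first pass. The sketch below assumes a simple rule of thumb that is not from any standard: a citation with no DOI or URL goes to the top of your manual-check pile. The sample citations are placeholders, and a DOI or URL in the text does not prove the source exists, so you still need to look it up.

```python
import re

# Rough patterns for illustration; real DOIs and URLs vary more than this.
DOI_PATTERN = re.compile(r"\b10\.\d{4,9}/\S+")
URL_PATTERN = re.compile(r"https?://\S+")


def needs_manual_check(citation):
    """True when the citation carries no DOI or URL that could be traced."""
    return not (DOI_PATTERN.search(citation) or URL_PATTERN.search(citation))


def triage_citations(citations):
    """Return the citations that should be verified by hand first."""
    return [c for c in citations if needs_manual_check(c)]


# Placeholder citations, invented for this example.
citations = [
    "Example, A. (2020). A made-up title. Journal of Examples.",
    "Example, B. (2021). Another made-up title. https://doi.org/10.1234/example",
]
flagged = triage_citations(citations)
print(flagged)
```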

Generative AI guidelines: principles, policies, and ethical foundations

Practical principles help you balance speed and trust when tools assist your work. You should treat these principles as a checklist every time you use a tool that helps produce content or images.

Accountability and accuracy: If your name is on a paper, slide, or report, you must verify the facts, figures, and interpretations before you publish. Check sources, validate statistics, and correct any inaccurate output.

Bias and limitations: Scan outputs for stereotypes and omissions. Compare multiple sources, ask for counterarguments, and test different prompts to reveal blind spots. These steps help reduce biased results across text, images, and data.

Intellectual property and attribution

Do not upload unpublished research or proprietary material to public tools. Generated material can echo existing work, so review outputs for originality and cite the actual sources. Document any material assistance with prompts, tool versions, and edits when that assistance affects research or publication.

“Keep authorship human: you are responsible for methods, claims, and accuracy.”

- Follow institutional policies and legal standards like FERPA, HIPAA, and GDPR.

- Record provenance for substantive assistance to preserve integrity and comply with publishing standards.

- Adopt simple rules: if your name appears, you verify the information.
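One way to record provenance is a small structured log entry per instance of substantive assistance. The sketch below is an assumption about what such a record might hold (tool, version, prompt, purpose, and your edits); the field names and the `ExampleAssistant` tool are hypothetical, so adapt them to whatever your publisher or unit requires.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import date


@dataclass
class AssistanceRecord:
    """One entry describing how a tool contributed to a piece of work."""
    tool: str
    tool_version: str
    prompt: str
    used_for: str
    human_edits: str
    recorded_on: str = field(default_factory=lambda: date.today().isoformat())


def to_disclosure_json(record):
    """Serialize the record so it can be stored alongside the manuscript."""
    return json.dumps(asdict(record), indent=2)


# Hypothetical entry; every value here is a placeholder.
record = AssistanceRecord(
    tool="ExampleAssistant",
    tool_version="2024-05",
    prompt="Summarize the methods section in 150 words.",
    used_for="First draft of the abstract",
    human_edits="Rewrote two sentences and verified all figures against the data",
    recorded_on="2024-05-01",
)
print(to_disclosure_json(record))
```

Keeping the record as plain JSON means it can be attached to a submission or archived with project files without special tooling.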

Data privacy, security, and access: using tools with caution and protection

Before you paste anything, decide where it belongs. Treat public tools like open forums and reserve non-public research, grant drafts, and unpublished manuscripts for protected environments.

Public tools vs. protected environments: Do not upload restricted information to public services. Use enterprise platforms or provisioned model access when data sensitivity requires it.

Regulatory mapping and university policies

Map your scenario to relevant rules: FERPA for student records, HIPAA for health data, and GDPR for personal data of individuals in the EU. Follow university policies and the standards your unit enforces.
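The mapping above can be written down as a simple lookup so a checklist or intake form can apply it consistently. This is a minimal sketch: the data-type labels are invented for illustration, and the table only covers the three laws named here, so anything it does not recognize is returned for a human to review.

```python
# Illustrative labels only; your institution may classify data differently.
REGULATION_BY_DATA_TYPE = {
    "student_record": "FERPA",
    "health_data": "HIPAA",
    "eu_personal_data": "GDPR",
}


def applicable_regulations(data_types):
    """Return (regulations that apply, data types that need human review)."""
    matched = sorted(
        {REGULATION_BY_DATA_TYPE[t] for t in data_types if t in REGULATION_BY_DATA_TYPE}
    )
    unknown = sorted(t for t in set(data_types) if t not in REGULATION_BY_DATA_TYPE)
    return matched, unknown


rules, needs_review = applicable_regulations(
    ["health_data", "student_record", "grant_draft"]
)
print(rules)         # regulations matched from the table
print(needs_review)  # types the table does not cover
```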

Enterprise safeguards and approved pathways

Use Microsoft Copilot only when the enterprise shield appears on your @ku.edu or @kumc.edu account. That icon signals encryption, identity and permission enforcement, and that your prompts are not used to train the underlying models.

For protected research, route workloads to approved platforms like Databricks (HIPAA-capable) or Azure OpenAI provisioned by Research Informatics. Those options give audit trails and controlled access.

Practical security practices

- Verify encryption, retention, and sensitivity labels before you share data.

- Ensure identity and permission controls limit access to authorized users only.

- Coordinate with departmental tech support or your CISO for edge cases.

“Don’t paste unpublished results into public tools; use shielded, provisioned environments for sensitive work.”
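A quick automated scan can act as a reminder before you paste text into a public tool. The sketch below checks for just two patterns, email addresses and numbers formatted like US Social Security numbers; both patterns and the sample text are assumptions chosen for illustration. It will miss most kinds of sensitive data, so treat a clean result as a prompt to think, not as clearance.

```python
import re

# Deliberately small, illustrative pattern set; not a complete detector.
PATTERNS = {
    "email address": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN-like number": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def find_sensitive(text):
    """Return the labels of any patterns found in the text, sorted by name."""
    return sorted(label for label, pattern in PATTERNS.items() if pattern.search(text))


# Made-up sample text; the address and number are placeholders.
draft_prompt = "Contact jane@example.edu about record 123-45-6789"
hits = find_sensitive(draft_prompt)
if hits:
    print("Stop and review before pasting. Found:", ", ".join(hits))
else:
    print("No known patterns found; still ask who can see this and how it may be reused.")
```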

Responsible use across research, teaching, and administration

Good stewardship in research and campus work starts with clear disclosure and vetted tools. You should document when a tool contributed materially to a project and record prompts or versions if that assistance affects results.

Research integrity

Standardize disclosure in manuscripts and reports. Follow publisher rules: major journals do not accept tools as authors, so you remain responsible for claims and methods.

Use screening tools like iThenticate carefully and prioritize primary-source checks over automated flags.

Teaching and learning

Set syllabus policies that state permitted use, required attribution, and prohibited practices. Avoid unreliable plagiarism detectors that can mislabel honest work and harm non-native speakers or students with disabilities.

Administrative work

Choose institutionally vetted tools for staff workflows so privacy, security, and standards are met. Limit access and verify retention and encryption before you share sensitive information.

Governance in practice

Align with unit policies, seek mentors’ guidance, and escalate tough questions to your research office or CISO early. Build simple checklists so individuals know what to do and when to ask for help.

For practical decision help, review your institution's decision-making resources for research.

Conclusion

Wrap up your practice by pairing productivity tools with clear checks on privacy and provenance. Choose protected environments for sensitive data and avoid public uploads of research or proprietary material.

Treat every output as a draft: verify facts, correct errors, address biases, and cite authentic sources before you reuse content or publish results.

Disclose any material assistance and keep authorship human to preserve integrity and meet publisher and university policies. Balance the opportunities of new content creation with the need for protection, accuracy, and compliance with regulations.

Stay current with guidance from your research office, IRB, and IT so your use of tools reduces institutional risk and improves the quality of your work.